TL;DR

The best creative testing structure balances control and automation. Use batch-based testing to let Meta optimize within groups while maintaining structured learning across batches for scalable results.

Key Takeaways

- Single-ad ad sets (SCAS) give clean data but waste budget at scale

- Putting all creatives in one ad set saves spend but limits exploration

- Batch-based testing is the recommended middle ground for growth

- Performance comes from how you structure tests, not just what you test

- Let Meta optimize within batches while you control batch-level decisions

The Question Every Scaling Brand Faces

When scaling paid social seriously, one question inevitably comes up:

When testing creatives, should you use single-ad ad sets for full control, or multiple ads per ad set to let Meta optimize spend, even during testing?

On paper, both approaches make sense. In practice, they lead to very different outcomes in terms of learning speed, budget efficiency, and decision quality. Let's break down the three main strategies we see brands use today, and when each one actually makes sense.

The 3 Creative Testing Strategies

1. 100% Testing Control: Single Creative Ad Sets (SCAS)

This is the most "controlled" approach: one ad = one ad set = one budget.

Pros:

- You decide exactly how much spend each creative gets

- Every ad gathers enough data to make a clear decision

Cons:

- Budget is spent regardless of performance

- Underperforming creatives still consume their full allocated budget

This works well when you need clean, isolated learnings, but it's inefficient when testing at scale.

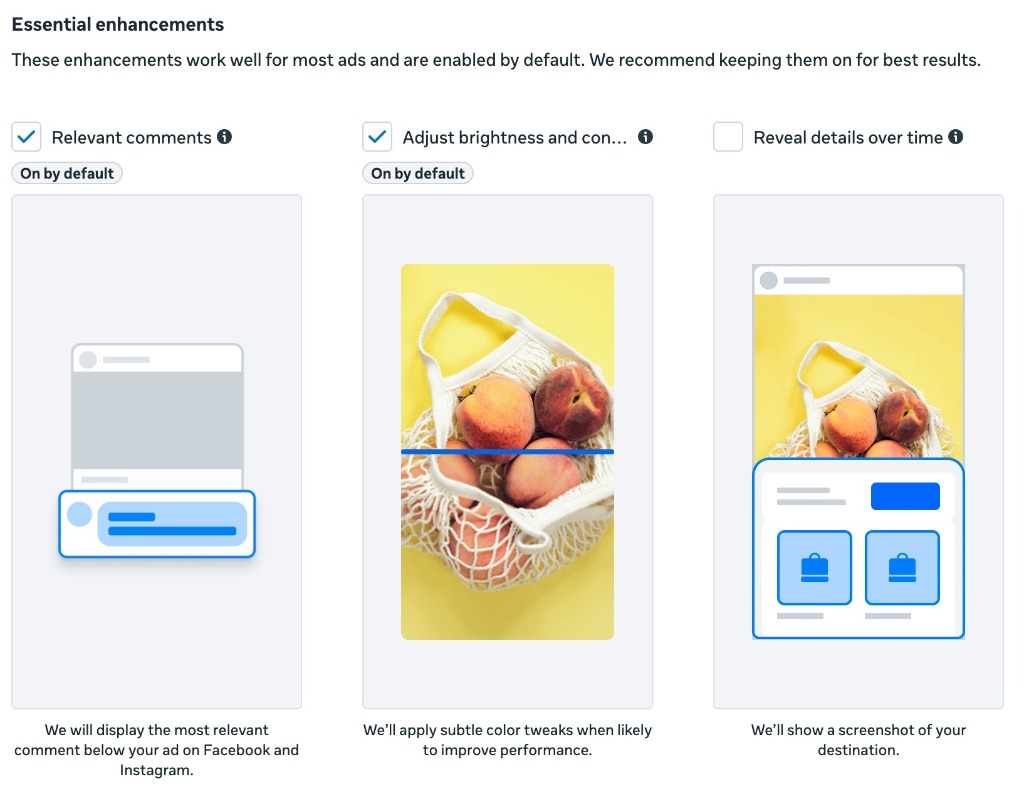
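To make the SCAS budget arithmetic concrete, here is a minimal Python sketch. It assumes four hypothetical creatives with made-up conversion rates and a flat 100 budget units per ad set. `Creative`, `scas_spend`, and `spend_on_losers` are illustrative names, not part of any Meta API, and the "keep threshold" is a hypothetical decision rule.

```python
from dataclasses import dataclass


@dataclass
class Creative:
    name: str
    conv_rate: float  # hypothetical "true" conversion rate, unknown while testing


def scas_spend(creatives: list[Creative], budget_per_ad_set: float) -> dict[str, float]:
    """SCAS: one ad = one ad set = one budget, so every creative receives the
    same spend regardless of how it performs."""
    return {c.name: budget_per_ad_set for c in creatives}


def spend_on_losers(
    spend: dict[str, float], creatives: list[Creative], min_conv_rate: float
) -> float:
    """Total spend that went to creatives below a hypothetical keep threshold."""
    rates = {c.name: c.conv_rate for c in creatives}
    return sum(amount for name, amount in spend.items() if rates[name] < min_conv_rate)


# Illustrative inputs only: these rates are invented, not measured results.
creatives = [
    Creative("A", 0.030),
    Creative("B", 0.010),
    Creative("C", 0.005),
    Creative("D", 0.020),
]
spend = scas_spend(creatives, budget_per_ad_set=100.0)
print(sum(spend.values()), spend_on_losers(spend, creatives, min_conv_rate=0.015))
# prints: 400.0 200.0
```

Under these assumptions, half of the test budget goes to the two creatives you would end up cutting. That is the "budget is spent regardless of performance" trade-off in numbers.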

2. 100% Delegation: All Creatives in One Ad Set

At the other extreme, you place all creatives (sometimes up to 50) in a single ad set and let Meta arbitrate.

Pros:

- Meta optimizes spend automatically toward early winners

- More budget efficiency during the test phase

- Meta recommends this structure, given how its new algorithm, Andromeda, works

Cons:

- Some creatives may never really get a chance

- Early signals can bias allocation too fast

This favors spend efficiency, but can limit exploration and hide potential winners.
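Meta does not publish how its delivery system splits spend inside an ad set. The sketch below is therefore a toy epsilon-greedy bandit, not Meta's algorithm. It only shows the general mechanism behind "early signals can bias allocation too fast": after a short warm-up, each impression goes to the creative with the best observed conversion rate. A creative that looks weak early may get little or no further exposure, so its estimate is never corrected. `greedy_allocation`, the warm-up size, and `epsilon` are all hypothetical.

```python
import random


def greedy_allocation(
    true_rates: dict[str, float],
    total_impressions: int,
    warmup: int = 50,
    epsilon: float = 0.0,
    seed: int = 0,
) -> dict[str, int]:
    """Toy epsilon-greedy allocator (NOT Meta's delivery logic).

    Every creative first gets `warmup` impressions. After that, each impression
    goes to the creative with the best observed conversion rate, except with
    probability `epsilon`, when a random creative is explored instead.
    Returns the number of impressions each creative received.
    """
    if warmup < 1:
        raise ValueError("warmup must be at least 1 so every observed rate is defined")
    if total_impressions < warmup * len(true_rates):
        raise ValueError("total_impressions must cover the warm-up phase")

    rng = random.Random(seed)
    names = list(true_rates)
    shown = {name: 0 for name in names}
    conversions = {name: 0 for name in names}

    def serve(name: str) -> None:
        # Show one impression and simulate a conversion at the hidden true rate.
        shown[name] += 1
        if rng.random() < true_rates[name]:
            conversions[name] += 1

    # Warm-up phase: equal exposure for every creative.
    for name in names:
        for _ in range(warmup):
            serve(name)

    # Exploitation phase: early observed rates decide where the rest goes.
    for _ in range(total_impressions - warmup * len(names)):
        if rng.random() < epsilon:
            serve(rng.choice(names))
        else:
            serve(max(names, key=lambda n: conversions[n] / shown[n]))
    return shown


rates = {"A": 0.02, "B": 0.03, "C": 0.01}  # hypothetical hidden rates
print(greedy_allocation(rates, 10_000, warmup=50, epsilon=0.0, seed=1))
print(greedy_allocation(rates, 10_000, warmup=50, epsilon=0.1, seed=1))
```

Comparing `epsilon=0.0` with a small positive `epsilon` across several seeds shows how much exposure a creative can lose when a noisy warm-up decides the allocation. Real delivery systems are far more sophisticated, but they face the same exploration-versus-exploitation tension.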

3. 50/50 Balance: Isolated Testing via Creative Batches (Recommended)

This hybrid approach balances exploration and exploitation.

- One new ad set per batch of new creatives, with budget defined at ad set level

- Batches compete against one another, while creatives inside a batch compete on equal footing

Pros:

- No need for a separate "scaling" budget upfront

- Better balance between learning and optimization

Cons:

- Decisions take slightly longer than with pure SCAS

This is often the best compromise for brands testing frequently while preparing for scale.
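The batch structure can be modeled in a few lines of Python, assuming one ad set per batch with its own budget. `Batch`, `split_within_batch`, and `pick_winners` are hypothetical names. The proportional split is a stand-in for the platform's in-ad-set optimization, which is not public in this form. The point the sketch shows is structural: each batch's spend is capped by its own ad-set budget, so Meta reallocates only among that batch's creatives, while promoting winners stays a batch-level decision you make.

```python
from dataclasses import dataclass, field


@dataclass
class Batch:
    """One ad set per batch of new creatives, with budget set at ad-set level."""
    name: str
    daily_budget: float
    # creative name -> hypothetical non-negative performance score (e.g. conversions)
    creatives: dict[str, float] = field(default_factory=dict)


def split_within_batch(batch: Batch) -> dict[str, float]:
    """Stand-in for in-ad-set optimization: spend proportional to score.

    Spend never leaves the batch, so the total always equals the batch budget.
    """
    if not batch.creatives:
        return {}
    total = sum(batch.creatives.values())
    if total == 0:
        # No signal yet: split the budget evenly.
        even = batch.daily_budget / len(batch.creatives)
        return {name: even for name in batch.creatives}
    return {name: batch.daily_budget * score / total for name, score in batch.creatives.items()}


def pick_winners(batches: list[Batch], top_n: int = 1) -> dict[str, list[str]]:
    """Batch-level decision you keep control of: promote each batch's top creatives."""
    return {
        b.name: sorted(b.creatives, key=lambda n: b.creatives[n], reverse=True)[:top_n]
        for b in batches
    }


batches = [
    Batch("batch-1", 300.0, {"hook-a": 3.0, "hook-b": 1.0, "hook-c": 0.0}),
    Batch("batch-2", 300.0, {"ugc-a": 0.0, "ugc-b": 2.0}),
]
for b in batches:
    print(b.name, split_within_batch(b))
print(pick_winners(batches))
```

Because budgets live at the ad-set level, a strong creative in `batch-1` cannot absorb `batch-2`'s budget. Each new batch therefore gets a guaranteed test budget, and creatives within it still compete for spend.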

What Each Strategy Means for Your Workflow

| Strategy | Control | Budget Efficiency | Best For |
|----------|---------|-------------------|----------|
| SCAS | High | Low | Clean A/B tests, small budgets |
| All in One | Low | High | Established creative types, scaling |
| Batch Testing | Medium | Medium | Continuous testing, growth phase |

Conclusion

Creative testing isn't about choosing between control and automation. It's about designing a structure that lets Meta optimize without destroying your ability to learn.

- SCAS gives you clean data, but wastes budget when you test at scale

- Putting all creatives in one ad set saves spend, but sacrifices exploration and potential long-term winners

- Batch-based testing creates the right tension between the two: enough freedom for the algorithm, enough structure for you to make confident decisions

If you're testing creatives continuously and want a smooth path from testing → pre-scale → scale, the batch approach is usually the most resilient setup.

In paid social, performance rarely comes from *what* you test alone; it comes from *how* you structure the test.

That's what turns creative testing into a growth system, not just an experiment.